Life for biomedical engineer Jordan Nguyen involves giving kids telekinesis-like abilities, learning from out-of-body experiences, and mind-controlled vehicles – all enabled by technology.

At just 31, Dr Jordan Nguyen is already impressive. He has been involved in numerous high-calibre projects, including in academia, as a speaker, and in efforts to improve the lives of those with disabilities. To this he recently added a nomination as a NSW finalist for the 2017 Australian of the Year, and a well-received debut TV documentary.

His enthusiasm for “a new era in human evolution” through intelligent machines is a big part of his message. Cutting-edge technologies will force us all to think differently about ourselves, even things as seemingly mundane as our posture.

“We had a camera rig of 84 cameras all the way around, and then we did some incredible number crunching where we had basically four terabytes of data for one minute of footage,” an excited Nguyen said of a recent experiment with the volumetric capture technology of Humense, a company he was an early investor in.

“We triangulated all the pixels in three-dimensional space and had a very dense point cloud of me. Once we finally got through that, I was able to put on a virtual reality headset and stand in front of myself, face-to-face.”

Seeing a super-real version of himself – which he compares to an out-of-body experience – made him realise something he knew but hadn’t paid proper attention to: one of his legs is shorter than the other. All this computation-on-steroids told Nguyen he needs shoe inserts. Chuckling at how ridiculous it might seem, he quickly added that there’s a serious side: such virtual reality applications could help us overcome a quirk of how we’re wired, which leaves us unable to properly imagine ourselves in the world.

“This will potentially change things in eating disorders as well. When you look into a mirror you generally don’t see what you would see if you were looking at another person,” he said.

“But when you meet a copy of yourself … I’m fascinated by how technology can make us self-reflect.”

We’re on the brink of being able to seriously extend our abilities, with human limits gazumped by technology. There’ll be a serious need to reconsider what makes us as we are.

But there are lots of reasons to be excited. After another giddy digression about how cool technology is, Nguyen apologises, unnecessarily, and explains that he barely slept the night before. He had been in the middle of a riveting anecdote about trying to give a boy with cerebral palsy remote telekinesis abilities like those of the X-Men’s Professor Xavier. As ABC Catalyst viewers have seen, getting as much as possible done in a 24-hour day – sleep be damned – is a regular part of the young man’s life.

“For me, I’m constantly working out where my time is best spent that’s going to go towards improving as many lives as possible,” Nguyen said.

Superpowers

Working at the Cerebral Palsy Alliance, Nguyen met Riley Saban, a 13-year-old with severe cerebral palsy, who was being assessed for an eye-tracking device (through which he communicates). Aired in May, the two-part Catalyst documentary, Becoming Superhuman, chronicled a project where Nguyen’s company were able to devise a solution to give the teenager superpowers.

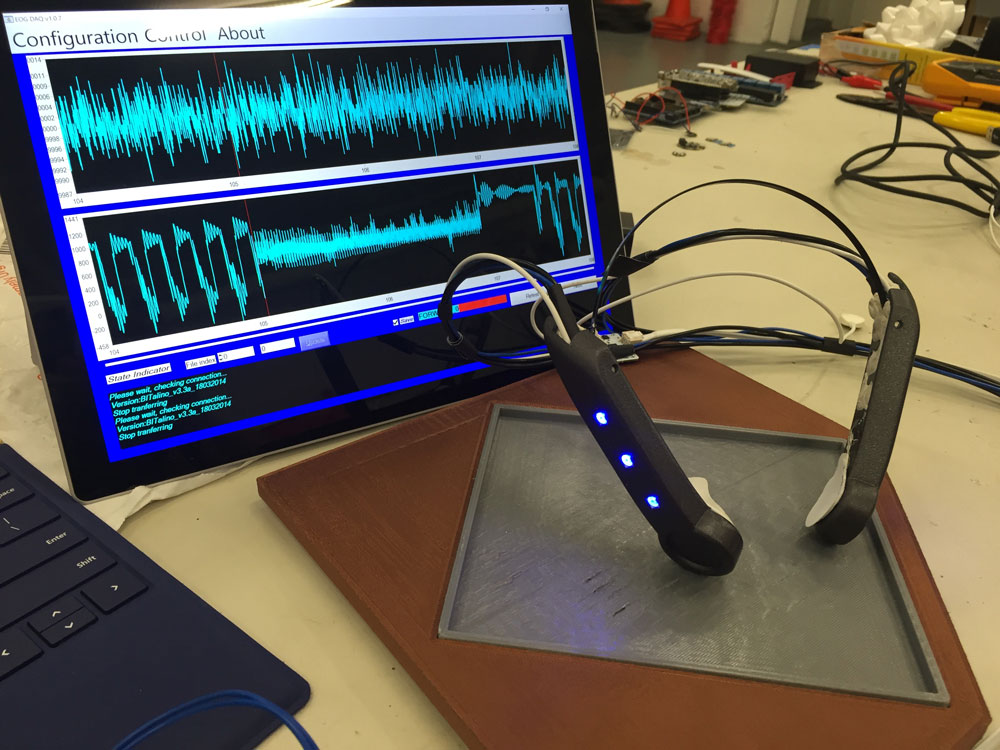

Over a month of filming, the team worked to harness Riley’s most reliable voluntary movements – those of his eyes – to allow the boy to turn electrical devices on and off. Using electrooculography (EOG), the electrical signals corresponding to four different eye movements were detected, transmitted to a computer, interpreted by artificial intelligence and used to control the devices.
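For readers curious how eye movements might become switch commands, here is a minimal sketch in Python. It is not the team’s method: the documentary’s system used artificial intelligence to interpret the signals, while this example substitutes a plain threshold rule. The two-channel layout, the choice of left, right, up and down as the four movements, the threshold value and the device names are all assumptions made for illustration.

```python
# Hypothetical mapping from eye movement to household device (illustration only).
ACTIONS = {"left": "lamp", "right": "tv", "up": "fan", "down": "radio"}


def classify_eye_movement(horizontal_uv, vertical_uv, threshold_uv=150.0):
    """Label one EOG sample pair as 'left', 'right', 'up', 'down' or None.

    horizontal_uv and vertical_uv are signed amplitudes in microvolts from
    two assumed electrode channels; threshold_uv is an assumed noise floor.
    """
    if max(abs(horizontal_uv), abs(vertical_uv)) < threshold_uv:
        return None  # too small to count as a deliberate movement
    if abs(horizontal_uv) >= abs(vertical_uv):
        return "right" if horizontal_uv > 0 else "left"
    return "up" if vertical_uv > 0 else "down"


def toggle_device(states, movement):
    """Flip the on/off state of the device mapped to this movement, if any."""
    device = ACTIONS.get(movement)
    if device is not None:
        states[device] = not states[device]
    return states


states = {name: False for name in ACTIONS.values()}
toggle_device(states, classify_eye_movement(220.0, 15.0))  # a strong glance right
```

In this sketch a strong rightward glance switches the hypothetical TV on, and glances below the threshold are ignored so that ordinary looking around does not trigger anything.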

Inspired by Riley’s mother’s recollections that the boy used to try and turn lights off using the power of his brain, the team made it a reality.

“The cool thing that’s come since then is I’ve heard many stories from parents and school teachers that now there’s been kids who have been caught sitting there staring at lights or staring at a TV trying to make it change,” Nguyen said.

“They’re thinking of Riley as the superhero that they’re trying to mimic!”

For the second of the program’s two nights, the team decided to go one better and try to get Riley to control a car. For help, they turned to Darren Lomman, Chief of Innovation and Design at Ability Centre, which supports around 2000 people right across WA with a dedicated Country Resource Program. Lomman is, said Nguyen, “practically a mechanical engineering version of me”.

The West Australian was also pointed towards his current field by chance, when he rode his motorbike into a hospital car park. A conversation struck up with a wheelchair-bound former motocross champion led to a third-year project. Looking for an inspiring project before graduating and chasing mining work in the thick of the resources boom, he found what he was looking for: a hand-controlled motorbike for paraplegics.

“We’ve now been operating for 13 years, and worked on over 1000 projects here in WA, and formed a team of people, and lots of corporate sponsors and partners,” he added.

“Jordan’s [also] gone off and done his little thing.”

The two young engineers, who were born a couple of weeks apart, hit it off at an assistive technology conference in Queensland. About six months before the documentary aired, they finally got the chance to collaborate. Nguyen called looking for a vehicle for Riley and a half-built buggy was found, from a project that ran out of funding.

“It had a roll cage that opens up so you can get in and out, and different supports, and we started making it joystick-controlled so people in wheelchairs could operate this buggy, which meant we already had all the actuators in place behind the throttle and the brakes,” Lomman said.

“We put in motors to control the steering wheel and all that sort of stuff. For us to adapt that was not a quick process, but we just had to adapt our system to work with his mind-controlled interface. And before you know it, we had a mind-controlled buggy that was featuring on a TV show!”

The buggy got off a truck – in need of a tightened bolt or two after the cross-country trip – eight days before deadline.

Racing towards the finish

Eye control was tricky: if Riley wanted to watch where he was going, he couldn’t be using his eyes to steer.

The eventual design got around this with an echolocation-inspired idea: using Riley’s skin as a sensor. Vibration motors attached to his arm let him know when an obstacle was approaching.

To drive, eye movement signals were picked up by EOG, amplified and sent via the cloud to a laptop at the front of the buggy.

The laptop was connected via USB to control the motors, and lidars on the front of the vehicle scanned the area in front.

“That was feeding information back into the tablet PC, up over the cloud, down to the box that Riley had next to him. That box was feeding vibration information to his arms.”
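The last step of that loop, turning a lidar reading into a buzz on the arm, can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the team’s code: the range thresholds, the linear ramp, and the idea of splitting the scan into a left half and a right half (one per arm) are all hypothetical.

```python
def vibration_level(distance_m, max_range_m=5.0, min_range_m=0.5):
    """Map an obstacle distance in metres to a vibration strength in [0.0, 1.0].

    The range limits are illustrative assumptions, not project values.
    """
    if distance_m >= max_range_m:
        return 0.0  # nothing close enough to warn about
    if distance_m <= min_range_m:
        return 1.0  # obstacle very close: full strength
    # Linear ramp: the closer the obstacle, the stronger the vibration.
    return (max_range_m - distance_m) / (max_range_m - min_range_m)


def arm_feedback(left_ranges_m, right_ranges_m):
    """Return (left_arm, right_arm) strengths from two halves of a lidar scan.

    Each argument is a non-empty list of distances; the nearest reading
    on each side decides how strongly that arm's motor vibrates.
    """
    return (vibration_level(min(left_ranges_m)),
            vibration_level(min(right_ranges_m)))


left_arm, right_arm = arm_feedback([6.0, 7.0], [0.3, 4.0])
```

Here the left side is clear, so the left motor stays still, while an obstacle 0.3 m away on the right drives the right motor at full strength.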

The last week was filled with all-night troubleshooting efforts. If it sounds like it went down to the proverbial wire, then that’s because it did.

“We took it to the park and tested it at about four in the morning to make sure that we could take control of it. Then we are calibrating like mad that next morning, after 45 minutes sleep – a quick 45 minutes sleep on the couch. About six in the morning, we got up and went, all right, let’s get back to it.”

Mind and action

It’s difficult to find an article on the bioengineering whiz that doesn’t mention his university pool accident. During some summertime high jinks, a diving board came loose and the third-year electronics engineering student tumbled awkwardly into the pool. He heard a sickening crack and, after getting out, was immediately unable to hold his neck upright.

After being completely unable to move – thankfully only for a day – Nguyen rethought his future. It would now be spent striving to improve the lives of those who don’t enjoy the mobility of the average person.

“It was from almost breaking my neck 10 years ago,” he said of his shift, which included a focus on the AI and biomedical subjects that would be needed to learn about enabling technology. He also started working harder, saying he was scraping by with a pass average before finding his purpose.

His final-year degree project involved building on the long-time smart wheelchair project his father has led at the University of Technology Sydney (UTS).

“I have quite a number of PhD students and colleagues working on the wheelchair,” Professor Hung Nguyen said of the project, which is now back at UTS.

“This is quite a diverse performance we’re looking at, with Jordan working on a few angles.”

Nguyen Junior’s work was featured in eight annual conference proceedings of the IEEE’s Engineering in Medicine and Biology Society between 2007 and 2013. His 2012 PhD thesis presented a complete technical solution for control and navigation of electric wheelchairs.

Demonstrated in real-time experiments and semi-clinical trials, the thesis’s significance earned it a place on the Chancellor’s List. Supervisor Dr Steven Su, writing in his recommendation, said he was astonished by the work “since it is very rare for a PhD to develop a complete solution of a challenging engineering problem not just in theory, but also in practice.”

“We used two cameras to see the relative distance and detect how far away is the subject,” Su said of the use of the stereo camera for depth perception. He added that the system was based on a combination of stereoscopic and spherical vision cameras.
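The principle behind two-camera depth perception is standard geometry: an object appears shifted between the left and right images, and the smaller that shift (the disparity), the farther away the object is. A minimal Python sketch of the textbook relationship follows, assuming an idealised, rectified pair of identical cameras. The function name and the example numbers are illustrative and are not taken from the thesis.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Estimate distance in metres to a point seen by both cameras.

    Uses the standard pinhole stereo relation: depth = f * B / d, where
    f is the focal length in pixels, B the distance between the two
    cameras in metres, and d the horizontal pixel shift of the point
    between the left and right images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Assumed example values: 700 px focal length, cameras 12 cm apart,
# and a point that shifts 42 px between the two images.
distance_m = depth_from_disparity(700.0, 0.12, 42.0)
```

With these assumed values the point works out to be about two metres away. Halving the disparity would double the estimated distance, which is why distant objects are harder to range accurately.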

The monoscopic spherical vision camera created a panoramic range of vision for obstacle detection around the wheelchair.

The system’s configuration was biologically inspired by a horse – able to detect predators over a large field of vision while grazing, with only small blind spots directly in front of its nose and behind it.

The semi-autonomous control is modelled on riding a horse. Having to control all movement using the pilot’s brain could be mentally quite draining, explained Nguyen.

“It would avoid the object in the way, it would avoid the people, autonomously,” he said.

“Kind of like riding a horse: if you ride it towards a tree, it probably won’t go into it. It’s that kind of idea.”
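The horse analogy describes what engineers often call shared control: the rider sets the direction and the machine quietly overrides it when a collision looms. A toy Python sketch of the idea is below. It is not the thesis’s algorithm; the distances, the linear slow-down and the encoding of the obstacle’s side are all assumptions made for illustration.

```python
def shared_control(user_speed, user_turn, obstacle_m, obstacle_side,
                   slow_m=2.0, stop_m=0.5):
    """Adjust the rider's command when an obstacle is near.

    user_speed:    requested forward speed, 0.0 to 1.0
    user_turn:     requested turn, -1.0 (full left) to 1.0 (full right)
    obstacle_m:    distance to the nearest obstacle in metres
    obstacle_side: -1 if it is to the left, 1 if to the right, 0 if ahead
    slow_m/stop_m: assumed distances at which the chair slows and stops
    Returns the (speed, turn) actually sent to the motors.
    """
    if obstacle_m >= slow_m:
        return user_speed, user_turn  # path clear: rider has full control
    if obstacle_m <= stop_m:
        return 0.0, user_turn  # too close: stop regardless of the command
    # Between the two limits, scale speed down and steer away from the obstacle.
    scale = (obstacle_m - stop_m) / (slow_m - stop_m)
    avoid = -obstacle_side * (1.0 - scale)
    turn = max(-1.0, min(1.0, user_turn + avoid))
    return user_speed * scale, turn


speed, turn = shared_control(1.0, 0.0, 1.25, 1)
```

In this example the rider asks for full speed straight ahead with an obstacle 1.25 m away on the right, and the sketch responds by halving the speed and veering left.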

Work on the wheelchair gained a lot of attention in newspapers and news websites, and on TV programs such as The Project.

Up until this year, the smart wheelchair was being developed at Nguyen’s social business, Psykinetic, but it is now back with his father. The focus of Psykinetic (a combination of mind and action) is on eye-controlled technology. It might not be as ‘glitzy’ as anything mind-controlled, but it’s more practical.

“Really, the common thing that I find across most of the people I meet is it doesn’t really matter what level of disability they have, generally they have good control over their eyes just because of the different pathway of control from the brain,” Nguyen said.

“That’s high level spinal cord injury, high level cerebral palsy, motor neuron disease, many, many others that I’ve gone through, quite often the level of control over the eyes is still great.”

Nguyen has other documentaries planned. Meanwhile, he’ll continue to focus his biomedical projects on eye-controlled technology when not appearing on TVs and stages and reminding us that the future will bring with it some pretty cool superpowers.

“A lot of advances are making us think about what it means to be human, but also what it is, who we are, who we want to be,” he said.

“And I love that.”